Integrating NATS and JetStream: Modernizing Our Internal Communication

Discover how Brokee transformed its microservice architecture from a chaotic spaghetti model to a streamlined, reliable system by integrating NATS.io. Leveraging NATS request-reply, JetStream, queue groups for high availability, and NATS cluster mode on Kubernetes, we achieved clear communication, scalability, and fault-tolerant operations. Learn how NATS.io empowered us to build a robust event-driven architecture tailored for modern DevOps and cloud engineering needs.

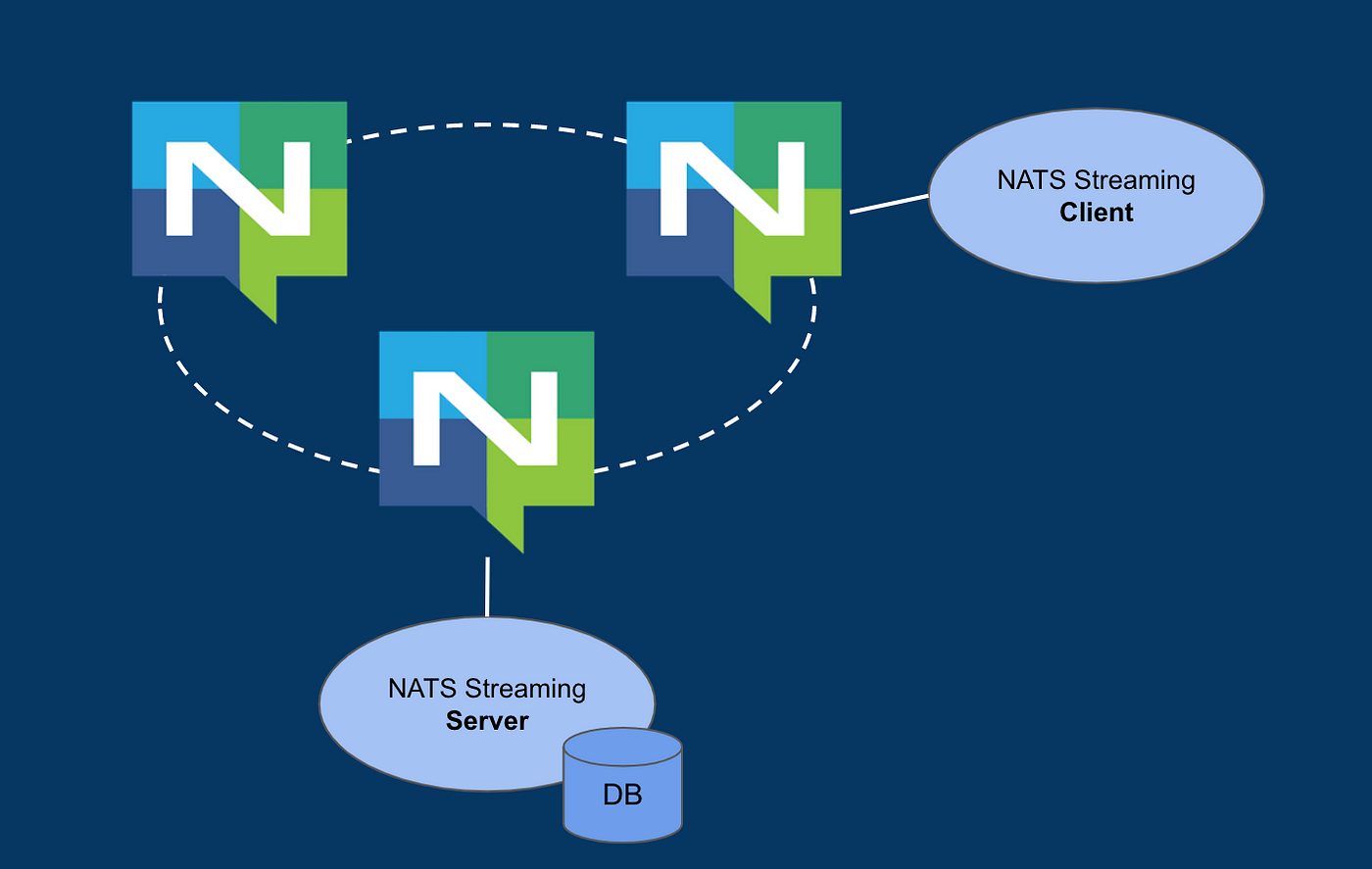

High-level NATS architecture

Introduction

Brokee was built using microservice architecture from day one as the initial focus for skills assessment was Kubernetes, and later we expanded to other technologies. At the same time, as new services were added, we sometimes took shortcuts with design decisions. Over the years, it resulted in a spaghetti architecture where many services were interconnected with each other and it became harder and harder to reason about dependencies and figure out which functionality should go to which service.

Discover how we improved our system's communication by integrating NATS messaging system and their JetStream functionality. We delve into the challenges we faced, the lessons we learned, and how we simplified our setup to make it more efficient. This integration has laid the foundation for a more scalable and resilient infrastructure, enabling us to adapt and innovate as our platform grows.

Why Change?

Our previous architecture relied heavily on a synchronous request-response model. While this served us well initially, it began to show limitations as our platform grew:

Scalability issues: Increasing traffic caused bottlenecks in our services.

Lack of flexibility: Adding new features required significant changes to the existing communication flow.

Reduced reliability: Single points of failure in the system led to occasional downtime.

Even though we use backoff and retry strategies in our APIs, requests can still fail if the server is unreachable, unable to handle them, or overwhelmed by too many requests. We needed a more robust, asynchronous system that could scale effortlessly. That’s when we turned to NATS and JetStream, which offered persistence.

Old architecture: tightly coupled services using synchronous request-response communication.

What is NATS and JetStream?

NATS is a lightweight, high-performance messaging system that supports pub/sub communication. JetStream extends NATS by adding durable message storage and stream processing capabilities, making it ideal for modern, distributed systems. For developers using the SDK, NATS offers support for a variety of programming languages, making it a flexible solution for integrating messaging capabilities.

With NATS and JetStream, we could:

Decouple services: Allow services to communicate without direct dependencies.

Enable persistence: Use JetStream’s durable subscriptions to ensure no messages are lost.

Simplify scaling: Seamlessly handle spikes in traffic without major architectural changes.

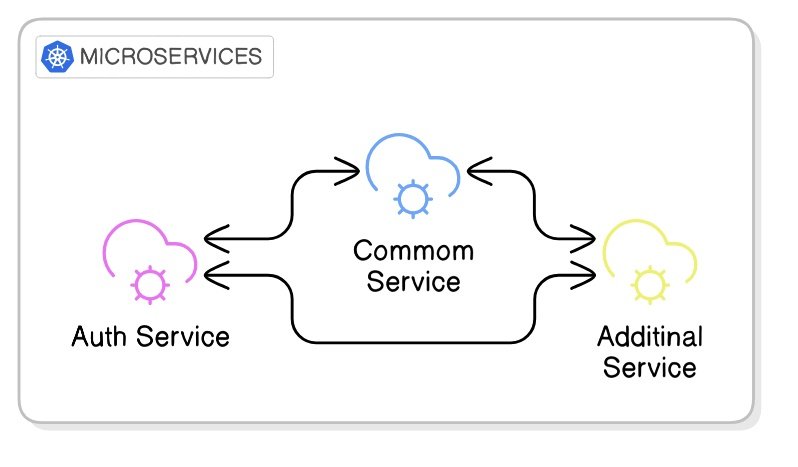

New architecture: decoupled services with asynchronous pub/sub communication via NATS.

The Integration Process

Here’s how we integrated NATS into our platform:

1. Setting Up NATS

We deployed NATS using Helm. Helm made the installation and configuration straightforward, allowing us to define resources and dependencies in a consistent, repeatable way.

To ensure reliability and scalability, we set up 3 running server instances of NATS, leveraging its clustering capabilities and the Raft consensus algorithm to handle increased traffic and provide fault tolerance.

For storage, we used persistent volumes, ensuring durability. NATS also offers the option to use memory-based storage. However, to optimize memory usage and prevent overload on our nodes, we decided to switch to persistent volume storage.

Additionally, we made the deployment more resilient by ensuring NATS instances were safely scheduled on separate nodes to avoid single points of failure and ensure high availability. We opted for the NATS headless service type as NATS clients need to be able to talk to server instances directly without load balancing.

config:

jetstream:

enabled: true

fileStore:

enabled: true

pvc:

enabled: true

size: 10Gi

storageClassName: premium-rwo-retain

cluster:

enabled: true

replicas: 3

statefulSet:

merge:

spec:

template:

metadata:

annotations:

cluster-autoscaler.kubernetes.io/safe-to-evict: "false"

podTemplate:

topologySpreadConstraints:

kubernetes.io/hostname:

maxSkew: 1

whenUnsatisfiable: "DoNotSchedule"

2. Migrating to Pub/Sub

Our first step was replacing direct request-response calls with pub/sub communication. For example:

Before: Common Service would send an HTTP request directly to Auth Service and await a response.

After: Common Service publishes a message to the subject

auth.users.roles.assign, which is then processed asynchronously by the Auth Service that subscribes to this subject.

We incorporated the Request-Reply pattern, which NATS makes simple and efficient using its core pub/sub mechanism. In this pattern, a request is published on a subject with a unique "inbox" reply subject. Responders send their replies to the inbox, enabling real-time responses. This approach is particularly useful for scenarios requiring immediate feedback.

To distribute the workload randomly across multiple instances, the Auth Service subscribes as part of a queue group, ensuring messages are distributed to different instances. NATS automatically manages to scale responders through these groups and ensures reliability with features like "drain before exiting" to process pending messages.

In the next Golang example, we prepare a payload and publish it as a request to a mentioned subject from the Common service using NATS. This demonstrates how the Request-Reply pattern enables sending data to a subject and awaiting a response.

func NATSRequestAssignCompanyRoleForUser(

nc *nats.Conn,

userID string,

roleID string,

timeout int,

) error {

// subject -> 'auth.users.roles.assign'

subject := models.Nats.Subjects.UsersRoleAssign

payload := models.RoleAssignmentPayload{

UserID: userID,

RoleIDs: []string{roleID},

}

payloadBytes, err := json.Marshal(payload)

if err != nil {

return fmt.Errorf("failed to marshal payload: %w", err)

}

msg, err := nc.Request(subject, payloadBytes, time.Duration(timeout)*time.Second)

if err != nil {

return fmt.Errorf("failed to send NATS request: %w", err)

}

var response map[string]interface{}

if err := json.Unmarshal(msg.Data, &response); err != nil {

return fmt.Errorf("failed to unmarshal response: %w", err)

}

success, ok := response["success"].(bool)

if !ok || !success {

return fmt.Errorf("role assignment failed, response: %v", response)

}

return nil

}

In this example, we set up a subscriber with a queue group that listens to the same subject in the Auth service. The queue group ensures load balancing among subscribers, while the handler processes the requests with the relevant business logic, sending responses back to the requester.

func SubscribeToRoleAssignQueue(

nc *nats.Conn, handler func(msg *nats.Msg),

) error {

_, err := nc.QueueSubscribe(

models.Nats.Subjects.UsersRoleAssign,

models.Nats.Queues.UserRolesAssign,

func(msg *nats.Msg) {

handler(msg)

})

if err != nil {

return err

}

return nil

}

In a typical pub/sub setup, if a service fails or is unavailable, there’s no automatic way to repeat the message, and it can fail silently. To address this, we turned to JetStream, which provides message persistence and reliable delivery. With JetStream, even if a service goes down, messages can be reprocessed once the service is back online, ensuring no data is lost and improving overall system reliability.

3. Implementing JetStream

JetStream added persistence to our messaging:

Streams: We defined streams to capture messages, grouping related data for efficient processing. For example, an

stack.deletecould store all stacks destroying messages, ensuring messages are retained and available for subscribers even during downtime.In the example below, we defined a JetStream stream named

STACKSfor managing testing stack operations. It subscribes to a single subject,stack.deletebut multiple subjects can be specified. The stream has a 1GB storage limit (maxBytes) and uses file storage with three replicas for fault tolerance. The retention policy is set toworkqueue, ensuring messages are retained until processed, and once a message is acknowledged, it will be deleted from the stream. It connects to the specified NATS server instances for message handling.

apiVersion: jetstream.nats.io/v1beta2

kind: Stream

metadata:

name: stacks

spec:

name: STACKS

description: "Manage stack operations"

subjects: ["stack.delete"]

maxBytes: 1073741824

storage: file

replicas: 3

retention: workqueue

servers:

- "nats://nats-headless.nats-system:4222"

Durable Subscriptions: Services could subscribe to streams and resume from where they left off, ensuring no data loss.

To provide flexibility and control over JetStream and consumer (a component that subscribes to a stream and processes the messages stored in that stream), we manage configurations through a manifest chart using JetStream Kubernetes controller called NACK, minimizing the need for code editing and rebuilding.

In the code, only minimal edits are required for specifying the subject, consumer, and queue group names. This approach ensures the configuration of streams and consumers is easily adjustable.

Additionally, we use push mode for streams, where messages are handled when placed in the queue. For durable queue consumers, the consumer and delivery group names must be the same to maintain consistency and work as expected.

Backoff and Acknowledgments: We use backoff in consumer configuration to control the number of retry attempts for message redelivery. Additionally, we set

ackWaitandmaxDeliverto define how long to wait before knowing if a message is acknowledged and after will be delivered.In some places, we use backoff, while in others, we use

ackWaitwith maxDeliver. You can use either backoff orackWait, but not both together: for multiple retries, backoff is preferred; for fewer retries,ackWaitis set to the execution time of your handler plus an additional 20-30% buffer, ensuring sufficient time to prevent premature exits and unacknowledged message.We also manually acknowledge messages after executing code, particularly in cases where validation fails due to invalid data, as there’s no need to redeliver the message. This helps to avoid unnecessary retries.

The next configuration sets up a JetStream consumer named

stack-deletefor the deletion of infrastructure stacks. It subscribes to thestack.deletesubject same as in stream subjects(viafilterSubject) and uses a durable nameSTACK_DELETE, ensuring message delivery resumes from where it left off.

apiVersion: jetstream.nats.io/v1beta2

kind: Consumer

metadata:

name: stack-delete

spec:

ackPolicy: explicit

ackWait: 20m

deliverGroup: STACK_DELETE

deliverSubject: deliver.stack.delete

deliverPolicy: all

description: Delete stack resources

durableName: STACK_DELETE

filterSubject: stack.delete

maxAckPending: 1000

maxDeliver: 5

replayPolicy: instant

servers:

- "nats://nats-headless.nats-system:4222"

streamName: STACKS

An example of using backoff instead of ackWait: By setting the desired retry interval instead of using ackWait, we ensure the total backoff interval is less than the maxDeliver value, or it will fail during creation/update. If there’s free interval capacity, it will reattempt with the last backoff interval.

...

spec:

ackPolicy: explicit

backoff:

- 1m

- 5m

- 10m

Key settings include:

ackPolicy: Explicit acknowledgment ensures messages are redelivered if not acknowledged.

ackWait: Set to 20 minutes to accommodate infrastructure destruction that can take up to 10-15 minutes in some cases.

deliverGroup & deliverSubject: Enables queue group-based delivery to

STACK_DELETE, ensuring load balancing among subscribers.maxAckPending: Limits unacknowledged messages to 1,000.

maxDeliver: Allows up to 5 delivery attempts per message, retrying every 20 minutes. If the message is not acknowledged after 5 attempts, it will remain in the stream.

replayPolicy: Instant replay delivers messages as quickly as possible.

servers: The consumer connects to the

STACKSstream on specified NATS servers for processing messages.

Next, we send a message to the stack.delete subject to request the deletion of a stack (the following example is written in Python). The process is straightforward: we create a message with the necessary information (userhash and test_id), and then publish it to the NATS server. Once the message is sent, we close the connection and return a response indicating whether the operation was successful or not.

async def delete_infra_stack(

userhash: str,

test_id: str,

) -> Dict[str, str]:

try:

nc = NATS()

await nc.connect(servers=[NATSConfig.server_url])

message = {"candidateId": userhash, "testId": test_id}

await nc.publish(

subject=NATSConfig.sub_stack_delete,

payload=json.dumps(message).encode("utf-8"),

)

await nc.close()

response = {

"success": True,

"message": f"Published {NATSConfig.sub_stack_delete} for {userhash}-{test_id}",

}

except Exception as e:

response = {

"success": False,

"message": str(e),

}

return response

In the next code snippet written in Golang (we use multiple languages for our backend code), the consumer subscribes to the stack.delete subject using the STACK_DELETE durable name. This allows the consumer to handle stack deletion requests while maintaining message persistence and retry logic as configured in JetStream. As you may notice subscribing is pretty straightforward as we manage the consumer configuration through the chart, which simplifies setup and allows easy adjustments without complex code changes.

func SubscribeToJSDestroyStack(js nats.JetStreamContext, svc Service) error {

subject := Nats.Subjects.StackDelete

durableName := Nats.DurableName.StackDelete

_, err := js.QueueSubscribe(subject, durableName, func(msg *nats.Msg) {

handleDeleteStack(msg, svc)

}, nats.Durable(durableName), nats.ManualAck())

if err != nil {

return fmt.Errorf("Error subscribing to %s: %v", subject, err)

}

return nil

}

func handleDeleteStack(msg *nats.Msg, svc Service) {

var req deleteStackRequest

if err := json.Unmarshal(msg.Data, &req); err != nil {

// ack on bad request data

msg.Ack()

return

}

if _, err := svc.DeleteStack(context.Background(), req.TestId, req.CandidateId, msg); err == nil {

// ack on success

msg.Ack()

}

}

4. Testing and Optimisation

We rigorously tested the system under load to ensure reliability and fine-tuned the configurations for optimal performance. Through this process, we identified the ideal settings for our message flow, ensuring efficient redelivery and minimal retries.

Challenges and Lessons Learned

Integrating NATS into our system posed several challenges, each of which provided valuable lessons in how to leverage NATS' features more effectively:

Request/Reply and Durable Subscriptions:

Initially, we thought the request/reply pattern would work well for durable subscriptions, as it seemed like a good way to ensure that every request would be retried in case of failure. However, we quickly realized that request/reply is more suited for real-time, immediate communication rather than long-term durability.

For durability, JetStream turned out to be the better option, as it ensures messages are stored persistently and retried until successfully processed. However, JetStream only delivers each message to a single designated consumer (the one configured to handle it), rather than broadcasting it to all subscribers.

Consumer and Queue Group Names:

We learned that for durable consumers to function properly, the consumer name and the queue group must be the same. If they don't match, the consumer won't subscribe to the stream, leading to issues in message delivery and distribution.This realization came after some trial and error. We tried subscribing to durable subscriptions but encountered errors. To understand what went wrong, we dug into the source code of the SDK and discovered the importance of matching the consumer name and queue group. Surprisingly, we didn’t find this mentioned clearly in the documentation, or perhaps we missed it.

Backoff vs. AckWait:

At first, we experimented with using both backoff and ackWait together, thinking it would allow us to fine-tune the retry behavior. We expected ackWait to control the waiting period for message acknowledgment, and then back off would manage retries with delays.

We first applied changes to the settings through Helm, and there were no errors, so we thought the changes were successfully applied. However, during testing, we noticed that the behavior wasn't as expected. When we checked the settings using NATS-Box Kubernetes pod, we found that the changes hadn’t taken effect. We then tried to edit the configurations directly in NATS-Box but encountered an error stating that the settings were not editable. This led to further investigation, as we realized that only one of either ackWait or backoff should be used to make it work.

Manual Acknowledgment:

One of the key lessons was the importance of manual acknowledgment. During our tests, we encountered situations where, even though the handler failed for some subscriptions, the message was still automatically acknowledged.

For instance, when an internal server error occurred, the message was considered acknowledged even though it wasn’t fully processed. We initially assumed that the acknowledgment would happen automatically if the message was successfully handled, similar to how HTTP requests typically behave.

However, when we moved to manual acknowledgment and controlled the timing ourselves, it worked perfectly. This change prevented false positives and ensured that messages weren’t prematurely acknowledged, even when an error or timeout occurred.

Testing with NATS-Box:

NATS-Box(available as part of NATS deployment) became an invaluable tool for us in testing and creating configurations. It allowed us to experiment and understand the impact of different settings on system behavior, helping us refine our approach to ensure optimal performance in real-world scenarios.

As we mentioned earlier, it helped us uncover small misunderstandings and nuances that weren't immediately obvious, giving us a deeper insight into how our configurations were being applied.

Conclusion

In conclusion, integrating NATS into our system proved to be a fast and efficient solution for our messaging needs. It wasn't without its challenges, but through testing and exploration, we were able to fine-tune the configurations to fit our needs. While we started with a simple setup, we may expand the use of NATS beyond internal communication to incorporate more features like monitoring and dead-letter queues. Additionally, we are considering replacing more of our internal architecture communication with NATS' pub/sub, and even potentially using NATS for external communication, replacing some of our REST APIs.

Based on our experience, using NATS with JetStream for durable messaging has proven to be a solid solution for ensuring reliable communication in our system. If you're looking to improve your system’s communication and explore event-driven architecture, we recommend considering NATS as a scalable and dependable choice, particularly for internal communication needs.

The Essential Skills Every DevOps Engineer Needs to Succeed in 2025

To stand out in 2025, DevOps engineers must master both technical skills and soft skills. We've gathered a breakdown of the essential skills every DevOps engineer should focus on.

1. Cloud Proficiency (AWS, Azure, GCP)

Understanding Cloud Platforms

Proficiency in cloud platforms like AWS, Azure, and Google Cloud Platform (GCP) is critical for any DevOps engineer. This includes tasks such as deploying infrastructure, managing services, and monitoring cloud environments.

Why Cloud Skills Matter

With companies shifting to the cloud, being skilled in cloud platforms is no longer optional. Engineers need hands-on experience with infrastructure-as-code, serverless applications, and cloud monitoring to ensure efficiency and scalability.

Brookee’s cloud-based DevOps assessments give engineers real-world, hands-on experience with AWS, Azure, and GCP preparing them for the tasks they’ll face on the job. Try it for free.

2. Continuous Integration and Continuous Deployment (CI/CD)

Mastering CI/CD Tools

CI/CD tools like Jenkins, GitLab CI, and GitHub Actions allow for automating code integration and deployment. Engineers should be proficient in setting up and maintaining pipelines to streamline the software delivery process.

Why CI/CD Skills Matter

CI/CD practices reduce deployment risks, accelerate feedback loops, and help deliver software updates frequently and reliably.

3. Containerization and Orchestration (Docker, Kubernetes)

Building and Orchestrating Containers

With the rise of microservices and cloud-native architectures, engineers need to be skilled in Docker for containerization and Kubernetes for orchestrating and scaling these containers.

Why Containerization Skills Matter

Containers allow consistent deployment across environments, and Kubernetes automates the scaling and management of these containers, ensuring uptime and reliability.

4. Infrastructure as Code (IaC)

Automating Infrastructure

Using Terraform and Ansible, engineers can automate infrastructure provisioning and management. This approach ensures consistency and makes it easy to manage infrastructure at scale.

Why IaC Skills Matter

By treating infrastructure as code, you can automate deployment and ensure version control, which leads to more predictable and reliable systems.

5. Monitoring and Observability

Implementing Monitoring Tools

Proficiency with tools like Prometheus, Grafana, and ELK Stack is essential for setting up monitoring and observability frameworks. Engineers need to track performance metrics and respond to incidents before they become critical.

Why Monitoring Matters

Proactive monitoring ensures system reliability and reduces downtime by identifying issues early.

6. Automation and Scripting

Automating Workflows

Knowing how to script in languages like Python, Bash, or PowerShell is crucial for automating repetitive tasks, configuring systems, and managing cloud environments.

Why Automation Matters

Automation saves time, reduces human error, and frees up engineers to focus on more strategic tasks.

7. Security (DevSecOps)

Embedding Security in DevOps

Security is now a core part of the DevOps process, known as DevSecOps. Engineers must be familiar with security practices, including vulnerability scanning, encryption, and automated security testing to safeguard infrastructure.

Why Security Skills Matter

In a world where breaches can cripple companies, embedding security into the DevOps process is crucial for both compliance and safeguarding sensitive data.

8. Soft Skills: Communication and Collaboration

Working Across Teams

Beyond technical expertise, DevOps engineers need excellent communication and collaboration skills. This ensures that operations and development teams work smoothly together to solve problems and meet objectives.

Why Soft Skills Matter

DevOps is about breaking down silos. Engineers who can communicate effectively and collaborate with cross-functional teams will thrive in complex, fast-paced environments.

Ready for DevOps Success?

In 2025, successful DevOps engineers will need a blend of technical expertise and communication skills to thrive. By mastering cloud platforms, CI/CD, automation, and security, engineers will be ready to make a significant impact from day one.

If you’re looking to sharpen your skills, consider Brokee as your secret weapon. Brokee’s DevOps assessments mirror real-world cloud environments, giving engineers and companies the hands-on experience they need to succeed.

7 Signs You Need to Hire a DevOps Engineer in 2025

Let's dive into why DevOps is crucial for businesses seeking to improve their development processes and enhance productivity. Also, we'll explore the 7 signs that show your business needs a DevOps engineer.

Discover the top 7 signs that indicate your business needs to hire a DevOps engineer. Plus, we'll explore why DevOps is crucial for businesses seeking to improve their development processes and enhance productivity in 2025.

What is DevOps and Why is it Important?

A modern approach to software development, DevOps combines development and operations to streamline the software delivery process.

Definition of DevOps

DevOps, a combination of "development" and "operations," refers to a set of practices, principles, and cultural philosophies aimed at improving collaboration and communication between software development (Dev) and IT operations (Ops) teams.

The primary goal of DevOps is to streamline the software delivery lifecycle, from initial development through testing, deployment, and maintenance, fostering a more efficient and collaborative approach to delivering high-quality software at a faster pace.

A DevOps approach emphasizes automation, continuous integration, continuous delivery, and a culture of shared responsibility, enabling organizations to respond rapidly to changing business requirements and deliver value to end-users more effectively.

Why You Need DevOps Engineers

Signs That Indicate You Need to Hire a DevOps Team

1. Deployment Failures and Slow Time-to-Market:

Frequent deployment failures coupled with a slow time-to-market can be indicative of underlying inefficiencies in the development and deployment pipeline. Delays in bringing products or features to market can significantly impact your competitiveness. A DevOps engineer can address this by implementing robust CI/CD pipelines, ensuring quicker and more reliable releases.

2. Ineffective Collaboration and Low Developer Productivity:

Issues in collaboration between development and operations teams can lead to low developer productivity. A DevOps engineer excels in fostering collaboration, breaking down silos, and implementing automation to enhance overall productivity.

3. Manual and Repetitive Processes

If your organization relies heavily on manual and repetitive processes for tasks like configuration management, provisioning, and scaling, a DevOps engineer can automate these processes, saving time and reducing the risk of human error.

4. High Infrastructure Costs

Rising infrastructure costs without a clear understanding of resource utilization may signal the need for a DevOps engineer. These professionals can optimize infrastructure, implement cloud solutions, and leverage containerization technologies to ensure efficient resource allocation.

5. Lack of Monitoring and Logging

Insufficient monitoring and logging can lead to delayed identification and resolution of issues, affecting system reliability. A DevOps engineer can implement robust monitoring and logging solutions to proactively detect and address potential problems.

6. Security Concerns

Security is a critical aspect of any IT infrastructure. If your organization is grappling with security vulnerabilities or lacks a comprehensive security strategy, a DevOps engineer can integrate security measures into the development and deployment processes, ensuring a more secure and resilient system.

7. Scalability Challenges with Increasing Workloads:

As your business grows, scalability challenges may arise. A DevOps engineer is well-equipped to design scalable architectures, implement auto-scaling solutions, and ensure your systems can handle increased workloads seamlessly.

DevOps Versus Traditional Software Development

The evolution of development and operations teams in DevOps marks a significant departure from traditional software development methods. DevOps culture emphasizes collaboration, transparency, and shared ownership, leading to a more cohesive and efficient development process.

When it comes to the difference between roles, DevOps engineers focus on the development and maintenance of software release systems, collaboration with the software development team, aligning with industry standards, and employing DevOps tools.

In contrast, software engineers focus on developing applications and solutions that operate on different operating systems, catering to specific user needs.

Read More: Cloud Engineer vs Software Engineer

The Role of a Full-Time DevOps Engineer

A DevOps engineer plays a pivotal role in driving the successful implementation of DevOps practices within an organization. Their key responsibilities include promoting collaboration between development and operations teams, automating processes to streamline product delivery, and ensuring the continuous integration and deployment of software.

Organizations need DevOps engineers to harness the full potential of DevOps principles. With their expertise in automation, deployment, and infrastructure management, a dedicated DevOps engineer can transform a conventional development team into a highly efficient and productive unit.

How many DevOps engineers do you need for your team? While there's not an official number, the ideal Developer to DevOps engineer ratio is 5:1, and in large software organizations, like Google, the ratio is 6:1.

The Benefits of DevOps for Your Business

Benefits of DevOps

Employing a DevOps engineer or team offers numerous benefits, including:

Improved deployment frequency

Faster time to market

Lower failure rate of new releases

Shortened lead time between fixes

Enhanced developer productivity

Contribution to delivering more reliable and customer-friendly software

Positive shift in customer satisfaction and overall product quality

Additionally, for most modern businesses that have moved to the cloud – such as GPC, AWS, or Azure-- DevOps engineers play a crucial role in managing and optimizing cloud environments. Their expertise extends to various aspects of cloud infrastructure and services, such as FinOps, automation, CI/CD, and more, and they contribute significantly to the effective implementation of DevOps practices in the cloud.

These advantages make having a DevOps engineer or team essential for organizations aiming to stay competitive in a rapidly evolving technological landscape.

Importance for Modern Businesses

DevOps is rapidly transitioning from a specialized approach to a mainstream strategy. Its adoption soared from 33% of companies in 2017 to an estimated 80% in 2024, mirroring the broader shift towards cloud computing.

The importance of DevOps in modern businesses cannot be overstated. It is instrumental in creating a culture of collaboration and shared responsibility among development and operations teams. This approach fosters a more efficient and effective workflow, leading to faster product development and deployment.

Read More: Essential DevOps Statistics and Trends for Hiring in 2025

Challenges of DevOps Implementation

Identifying the challenges of DevOps adoption is crucial for businesses looking to implement this methodology successfully. Common obstacles include resistance to change, cultural barriers within the organization, the cost of hiring DevOps engineers, and the complexity of integrating new tools and processes into existing workflows.

To overcome obstacles in implementing DevOps, organizations need to focus on fostering a culture of collaboration and openness to change. Encouraging transparency and communication across teams, providing adequate training and resources, and gradually introducing DevOps practices can help mitigate these challenges.

Another difficulty is hiring high-quality DevOps engineers who can do this complicated and highly technical work. A best practice for hiring DevOps engineers is to use DevOps assessments to ensure you select high-caliber candidates.

Read More: Case Study: DevOps Assessments Streamlined EclecticIQ's Hiring Process

Many Businesses are Adopting Cloud and DevOps Processes

Future of DevOps

The future of DevOps is significant, especially as businesses increasingly rely on software development to drive innovation and competitiveness.

By 2025, over 85% of organizations are expected to adopt a cloud computing strategy, aligning closely with the integration of DevOps practices. The need to hire a DevOps engineer is more pronounced than ever, given the rapid evolution of technology and the increasing demand for scalable and reliable software solutions.

Embracing DevOps is not just an option; it is a necessity for organizations striving to stay relevant and agile in a constantly evolving marketplace.

How We Reduced Our Google Cloud Bill by 65%

Learn how we reduced our Google Cloud costs by 65% using Kubernetes optimizations, workload consolidation, and smarter logging strategies. Perfect for startups aiming to extend their runway and save money.

Google Cloud Cost Reduction

Introduction

No matter if you are running a startup or working at a big corporation, keeping infrastructure costs under control is always a good practice. But it’s especially important for startups to extend their runway. This was our goal.

We just got a bill from Google Cloud for the month of November and are happy to see that we reduced our costs by ~65%, from $687/month to $247/month.

Most of our infrastructure is running on Google Kubernetes Engine (GKE), so most savings tips are related to that. This is one of those situations on how to optimize at a small scale, but most of the things can be applied to big-scale setups as well.

TLDR

Here’s what we did, sorted from the biggest impact to the least amount of savings:

Almost got rid of stable on-demand instances by moving part of the setup to spot instances and reducing the amount of time stable nodes have to be running to the bare minimum.

Consolidated dev and prod environments

Optimized logging

Optimized workload scheduling

Some of these steps are interrelated, but they have a specific impact on your cloud bill. Let’s dive in.

Stable Instances

The biggest impact on our cloud costs was running stable servers. We needed them for several purposes:

some services didn’t have a highly available (HA) setup (multiple instances of the same service)

some of our skills assessments are running inside a single Kubernetes pod and we can’t allow pod restarts or the progress of the test will be lost

we weren’t sure if all of our backend services could handle a shutdown gracefully in case of a node restart

For services that didn’t have a HA setup, we had the option to explore HA setup were possible (this often requires installing additional infrastructure components, especially for stateful applications, which in turn drives infrastructure costs up); migrating the service to a managed solution (e.g. offload Postgres setup to Google Cloud instead of managing it ourselves); accept that service may be down for 1-2 minutes a day if it’s not critical for the user experience.

For instance, we are running a small Postgres instance on Google Cloud and the load on this instance is very small. So, when some other backend component needs Postgres, we create a new database on the same instance instead of spinning up another instance on Google Cloud or running a Postgres pod on our Kubernetes cluster.

I know this approach is not for everyone, but it works for us as several Postgres databases all have a very light load. And remember, it’s not only about cost savings, this also allows us not to think about node restarts or basic database management.

At the same time, we are running a single instance of Grafana (monitoring tool). It’s not a big deal if it goes down during node restart as it is our internal tool and we can wait a few minutes before it comes back to life if we need to check some dashboards. A similar approach to the ArgoCD server that handles our deployments - it doesn’t have to be up all the time.

High Availability Setup

Here’s what we did for HA of our services on Kubernetes to be able to get rid of stable nodes, this can be applied to the majority of services:

created multiple replicas of our services (at least 2), so if one pod goes down, another one can serve traffic

configured pod anti-affinity based on the node name, so our service replicas are always running on different nodes:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app.kubernetes.io/name

operator: In

values:

- pgbouncer

topologyKey: kubernetes.io/hostname

added PodDistributionBudget with a minimum of 1 available pod (for services with 2 replicas). This doesn’t guarantee protection, but as we have automated node upgrades enabled, it can prevent GKE from killing our nodes when we don’t have a spare replica ready

reviewed terminationGracePeriodSeconds settings for each service to make sure applications have enough time to shut down properly

updated code in some apps to make sure they could be shut down unexpectedly. This is a separate topic, but you need to make sure no critical data is lost and you can recover from whatever happens during node shutdown

moved these services to spot instances (the main cost-savings step, the other steps were just needed for reliable service operations)

Experienced Kubernetes engineers can suggest a few more improvements, but this is enough for us right now.

Temporary Stable Instances

Now we come to the part about our skills assessments that need stable nodes. We can’t easily circumvent this requirement (yet, we have some ideas for the future).

We decided to try node auto-provisioning on GKE. Instead of having always available stable servers, we would dynamically create node pools with specific characteristics to run our skills assessments.

This comes with certain drawbacks - candidates who start our skills assessments have to wait an extra minute while the server is being provisioned compared to the past setup where stable servers were just waiting for Kubernetes pods to start. It’s not ideal, but considering it saves us a lot of money, it’s acceptable.

As we want to make sure no other workloads are running on those stable nodes, we use node taints and tolerations for our tests. Here’s what we add to our deployment spec:

nodeSelector:

type: stable

tolerations:

- effect: NoSchedule

key: type

operator: Equal

value: stable

We also add resource requests (and limits, where needed), so auto-provisioning can select the right-sized node pool for our workloads. So, when there is a pending pod, auto-provisioning creates a new node pool of specific size with correct labels and tolerations:

Node Taints and Labels

Our skills assessment are running a maximum of 3 hours at a time and then automatically removed, which allows Kubernetes autoscaler to scale down our nodes.

There are a few more important things to mention. You need to actively manage resources for you workloads or pods may get evicted by Kubernetes (kicked out of the node because they are using more resources than they should).

In our case, we are going through each skill assessment we develop and take a note of resource usage to define how much we need. If this was an always-on type of workload, we could have deployed vertical pod autscaler that can provide automatic recommendations of how much resources you need based on resource usage metrics.

Another important point, is that sometimes autoscaler can kick in and remove the node if the usage if quite low, so we had to add the following annotation to our deployments to make sure we don’t get accidental pod restarts:

spec:

template:

metadata:

annotations:

cluster-autoscaler.kubernetes.io/safe-to-evict: "false"

All of this allows us to have temporary stable nodes for our workloads. We use backend service to remove deployments after 3 hours maximum, but GKE auto-provisioning has its own mechanism where you can define how long these nodes can stay alive.

Optimizations

While testing this setup, we noticed that auto-provisioning was not perfect - it was choosing a little too big nodes for our liking.

Another problem, as expected, creating new node pools for every new workload takes some extra time, e.g. it takes 1m53s for a pending pod to start on an existing node pool vs 2m11s on a new node pool.

So, here’s what we did to save a bit more money:

pre-created node pools of multiple sizes with 0 nodes by default and autoscaling enabled. All of these have the same labels and taints, so autoscaler chooses the most optimal one. This saves us a bit of money vs node auto-provisioning

choose older instance types, e.g. N1 family vs N2 which is newer but a bit more expensive. Saved some more money

Plus, got faster test provisioning as node pools are already created, and we still have auto-provisioning as a backup option in case we forget to create a new node pool for future tests.

The last thing I wanted to mention here, we were considering 1-node per test semantics for resource-hungry tests, e.g. ReactJS environments. This can be achieved with additional labels and pod anti-affinity as discussed previously. We might add this on a case-by-case basis.

Consolidated Dev and Prod

We have a relatively simple setup for a small team: dev and prod. Each environment consists of a GKE cluster and a Postgres database (and some other things not related to cost savings).

I went to a Kubernetes meetup in San Franciso in September and discovered a cool tool called vcluster. It allows you to create virtual Kubernetes clusters within the same Kubernetes cluster, so developers can get access to fully isolated Kubernetes clusters and install whatever they want inside without messing up the main cluster.

They have nice documentation, so I will just share how it impacted our cost savings. We moved from a separate GKE cluster in another project for our dev environment to a virtual cluster inside our prod GKE cluster. What that means:

We got rid of a full GKE cluster. Even not taking into account actual nodes, Google started charging a fee for cluster management recently.

We can share nodes between dev and prod clusters. Even empty nodes require around 0.5 CPU and 0.5 GB RAM to operate, so the fewer nodes, the better.

We save money on shared infrastructure, e.g. we don’t need two Grafana instances, Prometheus Operators, etc. because it is the same “physical” infrastructure and we can monitor it together. The isolation between virtual clusters happens on the namespace level and some smart renaming mechanics.

We save money by avoiding paying for extra load balancers. Vcluster allows you to share ingress controllers (and other resources you’d like to share) between clusters, a kind of parent-child relationship.

We don’t need another cloud database, we moved our dev database to the prod database instance. You don’t have to do this step, but our goal was aggressive cost savings.

We had some struggles with Identity and Access Management (IAM) set up during this migration as some functionality required a subscription to vcluster, but we found a workaround.

We understand that there are certain risks with such a setup, but we are small-scale for now and we can always improve isolation and availability concerns as we grow.

Cloud Logging

I was reviewing our billing last month and noticed something strange - daily charges for Cloud Logging even though I couldn’t remember enabling anything special like Managed Prometheus service.

Google Cloud Logging Billing

I got worried as this would mean spending almost $100/month for I don’t know what. I was also baffled why it started in the middle of the month, I thought maybe one of the developers enabled something and forgot.

After some investigation, I found what it was:

Google Cloud Logging Volume

GKE Control Plane components were generating 100GB of logs every month. The reason I saw some charges in the middle of the month is there is a free tier of 50GB, so for the first two weeks there wouldn’t be any charges, and once you cross the threshold, you start seeing it in billing.

We already had somewhat optimized setup by disabling logging for user worklods:

GKE Cloud Logging Setup

We want to have control plane logs in case there are some issues, but this was way too much. I started investigating deeper and found that the vast majority of logs are info-level logs from the API Server. Those are often very basic and don’t help much with troubleshooting.

To solve this, we added an exclusion rule to the _Default Log Router Sink to exclude info logs from the API server:

Log Router Sink Exclusion Filter

As you can see on one of the previous images, the logging generation flattened out after applying this filter and we now have GKE logging under control. I’ve also added a budget alert specifically for Cloud Logging to catch this earlier in the future.

Conclusion & Next Steps

I wanted to see how much we can achieve without relying on any committed-use discounts or reserved instances as those approaches still cost money and are associated with extra risks, depending on if you buy 1 or 3-year commitments. Now, that we reduced our costs a lot, we can consider applying committed use discounts as those will be a pretty low risk at this level of costs.

I hope this will give you a few fresh ideas on how to optimize your own infrastructure as most of these decisions can be applied to all major cloud providers.

AWS DevOps Interview Questions Ultimate Guide for 2025: Insights for Recruiters and Hiring Teams

For recruiters evaluating DevOps talent, discover the most frequently asked AWS DevOps interview questions and answers to ensure you make the best hiring choices for your team.

Introduction

Recruiting the right DevOps engineers, especially those well-versed in AWS (Amazon Web Services), is a critical task for organizations aiming to excel in the world of cloud-based software development.

This guide is designed to assist recruiters and hiring teams by offering insights into AWS DevOps interview questions and providing sample answers.

Understanding these questions and their context will enable you to make more informed hiring decisions and select the best candidates for your team.

The DevOps symbol is an infinity loop representing constant activity and improved efficiency

What is DevOps?

Before we delve into AWS DevOps specifics, it's crucial to establish a common understanding of what DevOps is.

DevOps is a methodology that bridges the gap between software development (Dev) and IT operations (Ops). It streamlines the software development lifecycle, fostering collaboration, automation, and continuous monitoring to result in faster and more reliable software releases.

DevOps engineers are sometimes referred to as the following roles: Cloud System Engineers, Cloud Architects, Systems Administrators, Network Engineers, Site Reliability Engineers (SREs), Cloud Security Engineers, Cloud Solutions Architects, Cloud Operations Engineers, and Data Engineers.

Importance of DevOps in AWS

DevOps plays a pivotal role in the AWS ecosystem by accelerating software development, deployment, and maintenance.

It leverages AWS services to optimize resource provisioning, automate infrastructure management, and enhance software deployment. This ensures the reliability and scalability of AWS-based applications.

Basic DevOps Engineer Interview Questions

As recruiters and hiring teams seeking DevOps engineers with AWS expertise, it's essential to evaluate candidates' knowledge across various key areas. Here's an overview of essential topics to consider when crafting interview questions:

Basic Concepts

Explore the core principles of DevOps.

Evaluate the advantages of AWS for DevOps.

Define infrastructure as code (IaC) and its significance.

Infrastructure as Code

How does AWS CloudFormation support infrastructure as code?

Investigate the key components of a CloudFormation template.

Understand the concept of idempotence in IaC.

Continuous Integration and Continuous Deployment (CI/CD)

What is CI/CD, and how does it relate to DevOps?

Discuss the AWS services frequently used in CI/CD within AWS DevOps.

Delve into the creation of automated code deployment pipelines in AWS.

Monitoring and Logging

Assess knowledge about AWS tools for monitoring and logging.

Explain the importance of cloud-native monitoring.

Inquire about setting up automated alerts in AWS for critical events.

Networking

Scrutinize candidates' grasp of AWS Virtual Private Cloud (VPC) and its components.

Probe their understanding of securing data in transit within AWS.

Differentiate between a network ACL and a security group in AWS.

Criteria for Passing an AWS DevOps Interview

When it comes to scoring a candidate's experience and answers in an interview, we advise paying special attention to the following criteria:

Master AWS Fundamentals

A solid understanding of AWS services is essential. Candidates should be familiar with core services such as EC2, S3, RDS, and VPC, as well as foundational knowledge of IAM, networking, and cost management.

Coding and Scripting Skills

Proficiency in scripting languages like Python, Bash, or PowerShell is crucial for automating tasks and writing efficient scripts. Candidates should also have experience with configuration management tools like Ansible or Chef.

Knowledge of Git for version control is a must, and familiarity with programming concepts can be advantageous for troubleshooting or working with microservices.

Familiarity with AWS DevOps Tools

Candidates should have hands-on experience with key AWS tools, including but not limited to:

AWS CodePipeline: For continuous integration and delivery.

AWS CloudFormation: For infrastructure as code.

AWS Elastic Beanstalk: For managing application deployments.

AWS Lambda: For serverless architectures.

AWS CloudWatch: For monitoring and logging.

AWS CodeBuild and AWS CodeDeploy: For building and deploying applications.

Knowledge of container services like ECS or EKS (Kubernetes on AWS) is increasingly important.

Up-to-Date Knowledge

Staying current with the latest trends in DevOps and AWS is non-negotiable. This includes familiarity with:

New AWS services and features (e.g., Graviton processors, EventBridge).

Emerging practices like GitOps, FinOps, and chaos engineering.

Security trends and compliance standards, such as GDPR and SOC 2.

Problem-Solving and Troubleshooting Skills

DevOps is about solving real-world challenges efficiently. Candidates should demonstrate:

Analytical thinking and the ability to debug and resolve issues quickly.

Experience with tools like AWS CloudTrail for auditing and X-Ray for distributed tracing.

Creative solutions for optimizing performance, managing costs, or improving deployment workflows.

Experience with CI/CD and Automation

Candidates should be able to design, implement, and optimize CI/CD pipelines using tools like Jenkins, GitHub Actions, or AWS-native options.

Automation of infrastructure, deployments, and scaling should be a core skill.

Soft Skills (often overlooked but critical)

Communication and teamwork are vital in DevOps roles, as they involve cross-functional collaboration.

The ability to explain complex systems or processes clearly is especially important during troubleshooting or design discussions.

Additional Considerations for Modern AWS DevOps Roles

Cloud Cost Optimization Skills: With cost control becoming a top priority, familiarity with tools like AWS Budgets, Cost Explorer, and savings plans is increasingly valued.

Security and Compliance Expertise: AWS DevOps engineers must understand encryption, least privilege principles, and compliance tools like AWS Security Hub.

Multi-Cloud or Hybrid Experience: Although AWS-focused, experience with hybrid setups or other cloud providers (Azure, GCP) is often seen as a bonus.

Frequently Asked AWS DevOps Interview Questions and Answers

To evaluate candidates effectively, you should expect clear, concise responses to interview questions.

We've provided some specific AWS DevOps interview questions that can help assess a candidate's knowledge and experience, together with sample answers:

General AWS DevOps Concepts

What is AWS DevOps, and how does it differ from traditional software development and IT operations?

Assess understanding of AWS DevOps practices emphasizing automation, collaboration, and scalability.Explain the key benefits of adopting DevOps in AWS.

Include speed, resource optimization, operational efficiency, and scalability.What are the primary challenges when implementing DevOps on AWS, and how would you address them?

Evaluate problem-solving and practical experience with AWS-specific challenges.

Infrastructure as Code (IaC)

What is Infrastructure as Code (IaC), and why is it important in AWS DevOps?

Highlight benefits like consistency, automation, and versioning.Compare AWS CloudFormation and Terraform. When would you use one over the other?

Focus on their strengths and use cases in AWS-centric versus multi-cloud environments.How do you handle dependencies and ensure idempotence in IaC?

Probe understanding of advanced IaC concepts.

CI/CD and Automation

What is CI/CD, and how do you implement it using AWS services?

Explore experience with AWS CodePipeline, CodeBuild, and third-party tools like Jenkins.Describe the key components of AWS CodePipeline and how it supports CI/CD workflows.

Evaluate understanding of CI/CD service integration.What strategies would you use to minimize deployment downtime during CI/CD?

Discuss approaches like canary releases, rolling updates, or Blue-Green deployments.What is AWS CodeDeploy, and how does it differ from AWS Elastic Beanstalk?

Compare these tools for managing deployments.

Monitoring and Logging

What is AWS CloudWatch, and how is it used for monitoring in DevOps?

Assess understanding of monitoring, custom metrics, and alerting.How would you set up real-time alerts for critical failures in an AWS environment?

Test familiarity with CloudWatch Alarms and integrations with SNS or Lambda.What is the role of distributed tracing in DevOps, and how can AWS X-Ray help?

Probe knowledge of advanced monitoring tools.How does AWS Config complement monitoring in AWS DevOps environments?

Test understanding of compliance and resource monitoring.

Security and Compliance

How do you securely manage credentials and secrets in AWS?

Evaluate experience with AWS Secrets Manager, Parameter Store, and IAM policies.What best practices do you follow for IAM role and policy management?

Assess understanding of least privilege principles and resource-level access control.How would you ensure compliance with standards like SOC 2 or GDPR in AWS?

Test knowledge of AWS Security Hub, CloudTrail, and audit procedures.What is AWS KMS, and how is it used to manage encryption in AWS DevOps?

Probe understanding of encryption and key management.

Networking

What is a Virtual Private Cloud (VPC), and how does it support AWS DevOps practices?

Test understanding of networking fundamentals.Explain the difference between a security group and a network ACL in AWS.

Evaluate knowledge of network security.How do you secure data in transit in an AWS environment?

Discuss strategies involving TLS, VPNs, and AWS Certificate Manager.

Serverless and Containerized Architectures

What is AWS Lambda, and how can it be used in a DevOps workflow?

Explore serverless event-driven architectures.Compare AWS ECS and Amazon EKS. How do you decide which one to use?

Test knowledge of container orchestration in AWS.How do you handle container image management in AWS?

Discuss the use of Amazon Elastic Container Registry (ECR).

Scaling and Performance

How do you implement auto-scaling for an AWS application?

Explore experience with Auto Scaling Groups and scaling policies.What strategies do you use to optimize costs in a DevOps workflow?

Evaluate familiarity with AWS Budgets, Reserved Instances, and Savings Plans.How would you troubleshoot performance bottlenecks in an AWS environment?

Test understanding of tools like CloudWatch, AWS X-Ray, and third-party APM tools.

Advanced Scenarios

Describe the concept of Blue-Green deployments and how they can be implemented in AWS.

Assess understanding of deployment strategies.What is AWS CloudTrail, and how does it assist in auditing and troubleshooting?

Test knowledge of activity tracking and compliance monitoring.How would you design a disaster recovery strategy for a business-critical application on AWS?

Explore knowledge of RTO/RPO, multi-region deployments, and services like S3, Glacier, and Route 53.

Modern Trends and Best Practices

What is GitOps, and how would you implement it in AWS?

Test familiarity with modern DevOps trends.How do you use event-driven architecture in AWS DevOps?

Discuss tools like EventBridge, SNS, and SQS.How do you integrate observability into your DevOps practices?

Explore concepts like logging, tracing, and metrics in a unified manner.

Best Practices for AWS DevOps Interviews

Recruiters and hiring teams should guide candidates on how to excel in AWS DevOps interviews:

Encourage clear, concise answers.

Request real-world examples or use a DevOps AWS assessment to illustrate their experience.

Look for candidates' problem-solving skills and creativity.

Emphasize the importance of effective collaboration and communication.

Seek discussions of both successes and challenges from past roles.

Brokee's Live AWS DevOps Assessments reflect real daily work

How to Make Sure a System Engineer Has Real-World Experience

Interviewers often ask a range of AWS DevOps interview questions, including scenario-based questions, technical problem-solving exercises, and questions about real-life experiences.

While it is crucial to ask these types of questions, we recommend thinking outside the box when it comes to DevOps interviewing Modern tools like Live DevOps Assessments are proven to be better for than interviews at determining whether a DevOps engineer will perform well on the job. Plus, DevOps assessments are found to reduce overall DevOps hiring costs.

Final Thoughts

In the dynamic landscape of AWS DevOps, recruiting the right talent can make or break an organization's success. Recruiters and hiring teams serve as key players in building teams that can harness the full potential of AWS while delivering high-quality software to customers.

By asking the right AWS DevOps interview questions and using the best solutions for DevOps Assessments, recruiters and hiring teams can identify candidates with the skills and experience to excel in these roles.

Plus, did you know we now offer training for DevOps Engineers? Try it for free today!

How Hands-On Lab Training Accelerates Your DevOps Learning Curve

In this article, we’ll explore why hands-on labs are so effective and how they can drastically improve your DevOps skills.

DevOps is a fast-paced, dynamic field where theoretical knowledge alone is rarely enough to succeed. To truly master the skills needed in this industry, hands-on experience is essential.

Hands-on lab training offers a practical, immersive way for DevOps engineers to accelerate their learning curve and become job-ready faster.

In this article, we’ll explore why hands-on labs are so effective and how they can drastically improve your DevOps skills.

1. Real-World Problem Solving

Learning by Doing

In DevOps, engineers face complex, real-world challenges daily. Hands-on labs simulate these real-life tasks, such as configuring a Kubernetes cluster, troubleshooting cloud infrastructure, or setting up CI/CD pipelines. This experience allows engineers to actively solve problems rather than passively learn concepts.

Why It Matters

Theoretical knowledge can only take you so far. Working on actual infrastructure and handling real problems solidifies what you’ve learned, ensuring you can apply those skills when it matters most—on the job.

Example: Many engineers use Brokee’s hands-on labs to practice AWS, Azure, and DevOps tasks that mirror real job environments.

Whether you’re an entry-level engineer or preparing for a new role, Brokee’s labs provide practical experience that accelerates your job readiness.

Brokee offers several labs and tests to practice DevOps and cloud skills, including Azure: Blob Challenge and Azure: Load Balancer

2. Builds Confidence for Day-One Readiness

Hands-On = Confidence

Many engineers struggle with confidence during their first few months on the job because they’ve never had the chance to apply what they learned in real scenarios. Hands-on labs give engineers the opportunity to practice these skills repeatedly until they are fully confident in their abilities.

Why It Matters

Confidence in your DevOps skills from day one can drastically shorten onboarding time and increase your productivity early in your career.

Companies often prefer candidates who have hands-on experience with the tools and technologies they use.

3. Mastering Tools and Platforms

Get Familiar with Industry-Standard Tools

Hands-on labs allow engineers to get comfortable using critical DevOps tools like Terraform, Ansible, Docker, Jenkins, and cloud platforms like AWS, Azure, and GCP.

Lab environments replicate real job tasks, so engineers can focus on mastering specific tools while understanding how they integrate into larger workflows.

Why It Matters

Becoming proficient with tools is crucial for DevOps roles. Hands-on labs provide the chance to not only learn new tools but to also understand how they function in complex environments.

Example: Engineers can practice setting up a continuous integration pipeline using Jenkins, deploy a containerized application with Kubernetes, or automate infrastructure with Terraform in a lab environment before applying these skills in production.

Read More: The Top DevOps Tools in 2024

4. Safe Environment to Make Mistakes

Learning Without the Pressure

One of the greatest advantages of hands-on lab training is the ability to make mistakes without real-world consequences.

In an actual job setting, errors can lead to downtime, security risks, or financial losses. In a lab, engineers can experiment, fail, and learn without the pressure of damaging live environments.

Why It Matters

The freedom to experiment helps engineers learn faster. They can try different approaches, discover what works, and learn from failures—all without impacting actual projects.

Read More: The Best DevOps Bootcamps in 2024

5. Speeds Up the Learning Curve

Accelerating Skill Development

Hands-on labs enable faster learning by giving engineers instant feedback. Instead of reading through documentation and theory, they can immediately see the results of their actions in the lab environment.

This kind of real-time feedback significantly speeds up the learning process, as engineers can adjust their approach on the fly.

Why It Matters

Learning by doing accelerates mastery of concepts and tools. Engineers gain a deep understanding of how different DevOps practices work together, which ultimately helps them become proficient more quickly than with theoretical learning alone.

6. Preparing for Certifications

Practical Experience for Exams

Certifications like AWS DevOps Engineer, Microsoft Azure DevOps, or Google Cloud Professional DevOps Engineer require not just theoretical knowledge, but also practical understanding. Hands-on labs prepare engineers for these exams by allowing them to practice the exact scenarios they’ll be tested on.

Why It Matters

While studying for certifications is important, real-world practice is what truly prepares you to pass the exams and apply the knowledge in the workplace. Hands-on labs give you the confidence and experience to tackle even the most challenging certification questions.

Example: In an AWS hands-on lab, engineers can set up auto-scaling groups, configure CloudWatch for monitoring, and use Lambda for automation—real-world tasks that they’ll likely face on the AWS DevOps Engineer certification exam.

Read More: AWS DevOps Interview Questions and Answers for 2024

7. Gaining Practical Job Experience

Simulate the Job Environment

Hands-on labs not only prepare engineers for exams but also simulate day-to-day job tasks.

These labs mirror the exact work you’ll do in a DevOps role, such as deploying cloud infrastructure, setting up monitoring systems, or configuring secure environments. The more practice you get, the more comfortable you’ll be when performing these tasks in a live environment.

Why It Matters

This kind of real-world experience is what hiring managers look for. By practicing in labs, engineers can demonstrate they are ready to step into a role without needing extensive on-the-job training.

Brokee’s live labs

Conclusion

Hands-on lab training is an invaluable tool for accelerating the DevOps learning curve.

Whether you're mastering tools, preparing for certifications, or gaining real-world job experience, these labs provide the perfect environment to learn by doing.

We currently offer 3 free labs for engineers (no credit card needed!), and after that, you can have access to our unlimited testing library for only $9 per month. Try Brokee risk-free today!

The practical experience gained from our labs will significantly boost your confidence, shorten onboarding time, and make you job-ready from day one.

Mastering Azure DevOps: Top Training Resources and Certifications to Kickstart Your Career

As businesses increasingly move to cloud-native solutions, mastering Azure DevOps has become essential for engineers aiming to boost their careers.

Whether you're starting your journey or looking to advance your skills, here’s a guide to the best Azure DevOps training resources and certifications that will help you stand out in this fast-growing field.

As businesses increasingly move to cloud-native solutions, mastering Azure DevOps has become essential for engineers aiming to boost their careers.

Whether you're starting your journey or looking to advance your skills, here’s a guide to the best Azure DevOps training resources and certifications that will help you stand out in this fast-growing field.

1. Microsoft Certified: DevOps Engineer Expert

What It Is

The Microsoft Certified: DevOps Engineer Expert certification is one of the most recognized credentials for Azure DevOps engineers. It validates your ability to combine people, processes, and technologies to deliver continuously improved products and services.

What You’ll Learn

How to design and implement DevOps processes

Using version control systems like Git

Implementing CI/CD pipelines

Managing infrastructure using Azure DevOps and tools like Terraform and Ansible

Why It’s Important

This certification proves you can create and implement strategies that improve software development lifecycles, a critical skill for Azure DevOps engineers.

Recommended Resources:

Microsoft Learning Path: Free modules on the official Microsoft site provide a structured learning path to pass the certification.

Whizlabs and Udemy Courses: These platforms offer in-depth preparation courses for this certification.

2. AZ-400: Designing and Implementing Microsoft DevOps Solutions

What It Is

AZ-400 is the exam required to earn the Microsoft Certified: DevOps Engineer Expert certification. It covers designing and implementing DevOps practices for infrastructure, CI/CD, security, and compliance.

What You’ll Learn

How to integrate source control and implement continuous integration

Strategies for automating deployments and scaling infrastructure

Monitoring cloud environments and managing incidents effectively

Why It’s Important

Passing this exam is crucial for anyone aiming to specialize in Azure DevOps. It showcases your ability to manage full lifecycle DevOps processes in Azure environments.

Recommended Resources:

Microsoft Learn: This free resource offers structured modules and practice tests.

Udemy: The AZ-400 Exam Preparation Course is a highly rated resource for detailed exam preparation.

3. LinkedIn Learning: Azure DevOps for Beginners

What It Is

This LinkedIn Learning course is an excellent introduction for beginners to Azure DevOps, covering the basics of using the platform for continuous delivery, infrastructure management, and monitoring.

What You’ll Learn

Setting up an Azure DevOps environment

Managing code repositories with Git

Implementing CI/CD pipelines using Azure Pipelines

Why It’s Important

If you’re new to DevOps or just getting started with Azure, this course provides a solid foundation for understanding the tools and practices needed to succeed.

Recommended Resources:

LinkedIn Learning Subscription: Offers access to this and thousands of other related courses.

4. Pluralsight: Azure DevOps Fundamentals

What It Is

Pluralsight offers an in-depth course that covers core Azure DevOps concepts, including project management, version control, and pipeline automation.

What You’ll Learn

How to manage Azure DevOps organizations, projects, and teams

Configuring CI/CD pipelines for automated builds and deployments

Automating infrastructure with Terraform and Azure Resource Manager

Why It’s Important

For those who already have a basic understanding of DevOps, this course dives deeper into Azure-specific functionalities, preparing you for hands-on work with Azure projects.

Recommended Resources:

Pluralsight Subscription: Provides unlimited access to this course and other DevOps-related content.

5. Azure DevOps Hands-On Labs

What It Is

Hands-on labs offer practical, real-world experience by simulating real tasks and challenges within Azure DevOps environments. Labs allow engineers to practice and test their knowledge in controlled scenarios that mirror actual job tasks.

Why It’s Important

Nothing beats hands-on experience when learning new tools. Labs allow engineers to practice and refine their skills by working on real-world problems, making them invaluable for both beginners and those preparing for certifications.

Recommended Resources:

Brokee DevOps Assessments: Brokee offers real-world cloud-based assessments that simulate job environments, helping engineers practice hands-on Azure DevOps tasks and allowing companies to assess candidates' proficiency in real-time.

6. GitHub Learning Lab: CI/CD with GitHub Actions and Azure

What It Is

GitHub Learning Lab provides an interactive guide to integrating GitHub Actions with Azure for CI/CD pipelines. It's a great way to learn how to automate workflows and deployments using GitHub alongside Azure DevOps.

What You’ll Learn

Automating code builds and deployments with GitHub Actions

Integrating GitHub repositories with Azure environments

Best practices for implementing automated workflows in cloud environments

Why It’s Important

With many organizations using GitHub for code management, this course equips you with the skills to merge GitHub's powerful automation tools with Azure's cloud infrastructure.

Recommended Resources:

GitHub Learning Lab: Free access to interactive, self-paced courses.

Conclusion

Azure DevOps is a critical skill set for anyone entering the cloud engineering space, and mastering it requires both theoretical knowledge and practical experience.

By leveraging the right training resources and certifications, you can position yourself for success in a competitive job market.

Top 10 SRE Tools Every DevOps Engineer Should Know About

As a DevOps engineer, knowing the right tools for the job is essential to managing and optimizing complex infrastructures.

Let's explore the top 10 SRE tools every DevOps engineer should be familiar with.

Site Reliability Engineering (SRE) plays a crucial role in ensuring systems are reliable, scalable, and performant.

As a DevOps engineer, knowing the right tools for the job is essential to managing and optimizing complex infrastructures.

Below are the top 10 SRE tools every DevOps engineer should be familiar with, whether they’re focused on monitoring, automation, or incident management.

1. Prometheus

What is Prometheus?

Prometheus is an open-source monitoring and alerting toolkit designed for reliability. It collects metrics from various sources, stores them in a time-series database, and allows engineers to set up powerful alerting based on predefined thresholds.

Why You Need It

Prometheus is widely adopted for system monitoring due to its scalability and flexibility. It integrates seamlessly with Kubernetes and other cloud-native environments, making it an essential tool for SREs and DevOps engineers alike.

Displaying Prometheus Metrics in Grafana

2. Grafana

What is Grafana?

Grafana is an open-source data visualization and analytics tool that integrates with Prometheus and other data sources to provide real-time dashboards.

Why You Need It

Grafana’s customizable dashboards give teams a clear visual overview of system health, performance metrics, and potential bottlenecks. This allows SREs to spot issues quickly and maintain system reliability.

Grafana Dashboard

3. Terraform

What is Terraform?

Terraform by HashiCorp is a powerful tool for Infrastructure as Code (IaC). It enables engineers to define cloud infrastructure resources using declarative code, which can be version-controlled and automated.

Why You Need It

Automating infrastructure provisioning with Terraform reduces human error and ensures consistency across environments. For SREs, this means more reliable deployments and faster recovery from incidents.

High-Level Idea of Terraform

4. Kubernetes

What is Kubernetes?

Kubernetes is the most popular container orchestration platform, used to manage and scale containerized applications across clusters.

Why You Need It

Kubernetes automates the deployment, scaling, and management of containerized applications. Its self-healing capabilities, auto-scaling, and robust ecosystem make it an indispensable tool for any SRE or DevOps engineer focused on maintaining reliability.

Kubernetes in a Nutshell

5. PagerDuty

What is PagerDuty?

PagerDuty is an incident management platform designed to help DevOps and SRE teams respond to incidents in real-time.

Why You Need It

PagerDuty integrates with monitoring tools and alerts teams when something goes wrong. It helps organize and escalate incidents, ensuring that the right people respond promptly to minimize downtime and system impact.

The PagerDuty Suite of Tools

6. Ansible

What is Ansible?

Ansible is an open-source tool for automation and configuration management. It allows for the automation of application deployment, cloud provisioning, and system configurations.

Why You Need It

SREs use Ansible to automate repetitive tasks, reducing manual intervention and minimizing configuration drift across environments. It’s essential for maintaining consistent and reliable infrastructure.

Ansible Automation Platform

7. ELK Stack (Elasticsearch, Logstash, Kibana)

What is the ELK Stack?

The ELK Stack is a combination of three tools: Elasticsearch (search and analytics engine), Logstash (log pipeline), and Kibana (visualization).

Why You Need It

This stack is perfect for log management, allowing SREs to collect, analyze, and visualize logs in real-time. With ELK, you can identify and troubleshoot issues across distributed systems, improving reliability and system observability.

Logs Web Traffic and More

8. Jenkins

What is Jenkins?

Jenkins is a popular open-source automation server used to build and manage CI/CD pipelines.

Why You Need It